SMILA/Specifications/Partitioning Storages

Implementing Storage Points for Data Backup and Reusing

Implementing Storage Points

Requirements

The core of the SMILA framework consists of a pipeline in which data is pushed into a queue, from which it is fed into data flow processors (DFPs). The requirement for Storage Points is that it should be possible to store records in a specific “storage point” after each DFP. A storage point in this case means a storage configuration where data is saved; for example, it can be a partition in local storage (see the “Partitions” section below for more information) or some remote storage. Storage points should be configured in the following way:

- Each DFP should have a configuration for the storage point from where data will be loaded before the BPEL processing (“Input” storage point);

- Optionally it should be possible to configure the storage point where data should be stored after BPEL processing (“Output” storage point). If this configuration is omitted, data should not be stored to any storage point at all after BPEL processing;

- If the “Input” and “Output” storage points have the same configuration, data in the “Input” storage point should be overwritten after BPEL processing.
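The three rules above can be sketched as a small decision function. This is only an illustration, not SMILA code: the class `DfpStorageConfig` and the enum `WriteMode` are hypothetical names, and the sketch assumes that the “Input” ID has already been resolved (default handling is left out).

```java
import java.util.Objects;
import java.util.Optional;

/** Hypothetical holder for the "Input"/"Output" storage point IDs of one DFP. */
final class DfpStorageConfig {
    /** What should happen to the records after BPEL processing. */
    enum WriteMode { NONE, OVERWRITE_INPUT, WRITE_TO_OUTPUT }

    private final String input;
    private final String output; // null when no "Output" storage point is configured

    DfpStorageConfig(String input, String output) {
        this.input = Objects.requireNonNull(input, "an Input storage point is required");
        this.output = output;
    }

    String input() {
        return input;
    }

    Optional<String> output() {
        return Optional.ofNullable(output);
    }

    /** Applies the three configuration rules listed above. */
    WriteMode writeMode() {
        if (output == null) {
            return WriteMode.NONE; // nothing is stored after processing
        }
        return output.equals(input) ? WriteMode.OVERWRITE_INPUT : WriteMode.WRITE_TO_OUTPUT;
    }

    public static void main(String[] args) {
        System.out.println(new DfpStorageConfig("p1", null).writeMode());
        System.out.println(new DfpStorageConfig("p1", "p1").writeMode());
        System.out.println(new DfpStorageConfig("p1", "p2").writeMode());
    }
}
```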

The goal of these modifications is that information stored in storage points can be accessed at any later time. This will solve the following problems:

- Backup and recovery: It will be possible to make a backup copy of a specific state of the data.

- Failure recovery during processing: Some DFPs involve expensive processing. With storage points it will be possible to continue processing from one of the previously saved states if a DFP fails.

- Reusing data collected from previous DFPs: Data that results from executing some DFP sequence can be saved to a storage point and reused later.

- Easy data management: It will be possible to easily manage saved states of data, for example to delete or move a storage point that contains obsolete data.

However, none of this should make the basic configuration of a SMILA system more complicated: if one does not care about multiple storages at all, it should not be necessary to configure storage points throughout the configuration files; everything should run correctly with the defaults.

Architecture overview

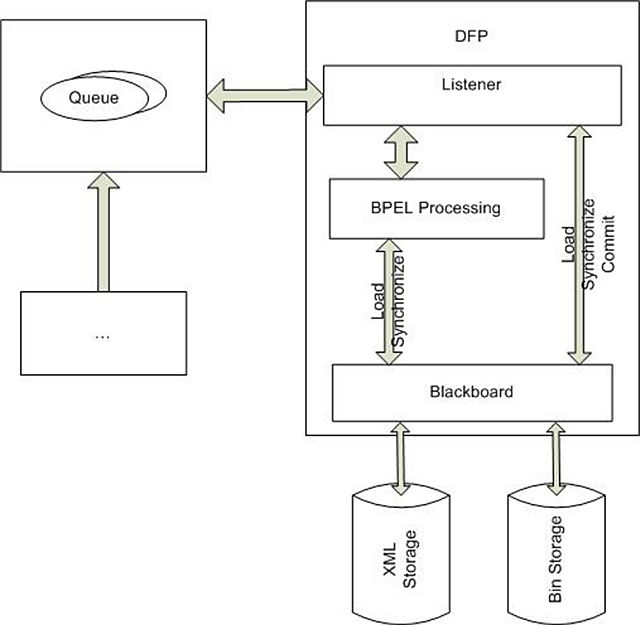

Following figure shows the overview of the core components of the SMILA framework:

To support the above requirements components shown on Figure 1 should be changed in the following way:

A. There should be a way to configure storage points for each DFP;

B. The Blackboard service should be changed to handle storage points.

Proposed changes

Storage points configuration

It is proposed to use an XML configuration file to configure storage points. Storage points will be identified by name, and the whole configuration will look like the following:

    <StoragePoints>
      <StoragePoint Id="point1">
        <storage point parameters, e.g. storage interface, partition etc.>
      </StoragePoint>
      ...
    </StoragePoints>

For example:

    ...
    <StoragePoint Id="point1">
      <XmlStorage Service="XmlStorageService" Partition="A"/>
      <BinaryStorage Service="BinaryStorageService" Partition="B"/>
    </StoragePoint>
    ...

A user can define a default storage point, which is used whenever no specific storage point ID is given:

    ...
    <StoragePoint Id="DefaultStoragePoint">
      <XmlStorage Service="XmlStorageService" Partition="A"/>
      <BinaryStorage Service="BinaryStorageService" Partition="B"/>
    </StoragePoint>
    ...
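As a rough illustration of how such a configuration could be read, the following sketch parses the proposed XML with the standard JDK DOM parser and falls back to `DefaultStoragePoint` when no ID is given. The class `StoragePointRegistry` and its methods are hypothetical, and treating an unknown ID as an error is an assumption of this sketch, not part of the proposal.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical in-memory view of the <StoragePoints> configuration. */
final class StoragePointRegistry {
    static final String DEFAULT_ID = "DefaultStoragePoint";

    // storage point ID -> { XML storage partition, binary storage partition }
    private final Map<String, String[]> partitionsById = new HashMap<>();

    static StoragePointRegistry parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            StoragePointRegistry registry = new StoragePointRegistry();
            NodeList points = doc.getElementsByTagName("StoragePoint");
            for (int i = 0; i < points.getLength(); i++) {
                Element point = (Element) points.item(i);
                registry.partitionsById.put(point.getAttribute("Id"), new String[] {
                    partitionOf(point, "XmlStorage"), partitionOf(point, "BinaryStorage") });
            }
            return registry;
        } catch (Exception e) {
            throw new IllegalArgumentException("invalid storage point configuration", e);
        }
    }

    /** Returns the Partition attribute of the first child with the given tag, or null. */
    private static String partitionOf(Element point, String tag) {
        NodeList nodes = point.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : ((Element) nodes.item(0)).getAttribute("Partition");
    }

    /** Looks up a storage point; a null or empty ID selects the default storage point. */
    String[] resolve(String id) {
        String key = (id == null || id.isEmpty()) ? DEFAULT_ID : id;
        String[] partitions = partitionsById.get(key);
        if (partitions == null) {
            throw new IllegalArgumentException("unknown storage point: " + key);
        }
        return partitions;
    }

    public static void main(String[] args) {
        String xml = "<StoragePoints>"
            + "<StoragePoint Id=\"DefaultStoragePoint\">"
            + "<XmlStorage Service=\"XmlStorageService\" Partition=\"A\"/>"
            + "<BinaryStorage Service=\"BinaryStorageService\" Partition=\"B\"/>"
            + "</StoragePoint></StoragePoints>";
        String[] partitions = parse(xml).resolve(null);
        System.out.println(partitions[0] + " / " + partitions[1]);
    }
}
```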

To make configuration easier, the storage APIs can be normalized so that all storages implement the same interface. (A proposal on this subject was posted by Ivan to the mailing list.)

Alternative: Storage Point ID as OSGi service property

In this alternative we do not need a centralized configuration of storages and storage points, but just add a Storage Point ID to each Record Metadata/Binary Storage service as an OSGi service property (or pass it as a JMS property, which is discussed in the next section), e.g. in the DS component description of a binary storage service:

    <component name="BinaryStorageService" immediate="true">
      <service>
        <provide interface="org.eclipse.smila.binarystorage.BinaryStorageService"/>
      </service>
      <property name="smila.storage.point.id" value="point1"/>
    </component>

And in an (XML) Record Metadata storage service:

    <component name="XmlStorageService" immediate="true">
      <implementation class="org.eclipse.smila.xmlstorage.internal.impl.XmlStorageServiceImpl"/>
      <service>
        <provide interface="org.eclipse.smila.xmlstorage.XmlStorageService"/>
        <provide interface="org.eclipse.smila.storage.RecordMetadataStorageService"/>
      </service>
      <property name="smila.storage.point.id" value="point1"/>
    </component>

(Note that we also introduced a second interface here, which is more specialized for storing and reading Record Metadata for a Blackboard than the XmlStorageService but does not require the service to use XML to store record metadata.)

Then the Blackboard wanting to use Storage Point "point1" would just look for a RecordMetadataStorageService and a BinaryStorageService (for attachments) having the property set to "point1". There would be no need to implement a central StoragePoint configuration facility.
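The lookup by property boils down to an OSGi (LDAP-style) filter on `smila.storage.point.id`. The sketch below only builds that filter string in plain Java so that it stays free of OSGi dependencies; the class `StoragePointFilter` is a hypothetical helper. In a real bundle the result would presumably be passed, together with the interface name, to `BundleContext.getServiceReferences(String clazz, String filter)`.

```java
/** Hypothetical helper that builds the service filter for one storage point. */
final class StoragePointFilter {
    /** Property name taken from the component descriptions above. */
    static final String PROPERTY = "smila.storage.point.id";

    /** Escapes the characters that are special in OSGi filter values. */
    static String escape(String value) {
        StringBuilder escaped = new StringBuilder();
        for (char c : value.toCharArray()) {
            if (c == '\\' || c == '*' || c == '(' || c == ')') {
                escaped.append('\\');
            }
            escaped.append(c);
        }
        return escaped.toString();
    }

    /** Returns a filter matching services registered for the given storage point. */
    static String forStoragePoint(String storagePointId) {
        return "(" + PROPERTY + "=" + escape(storagePointId) + ")";
    }

    public static void main(String[] args) {
        // The same filter is used for both service interfaces:
        // RecordMetadataStorageService and BinaryStorageService.
        System.out.println(forStoragePoint("point1"));
    }
}
```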

Configuring storage points for DFP

As shown in Figure 1, the Listener component is responsible for getting a Record from the JMS queue, loading the record onto the Blackboard, and executing the BPEL workflow. Storage points cannot be configured inside the BPEL workflow because the same BPEL workflow can be used in multiple DFPs and hence with different storage points. It is therefore proposed to configure storage point IDs in the Listener rules. With such a configuration it will be possible to have a separate storage point configuration for each workflow, and all DFPs will be configured in a single place.

There are two ways of how storage points can be configured:

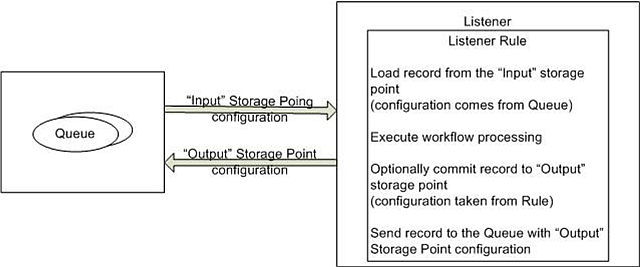

1. Listener rule contains configuration only for “Output” storage point. The “Input” storage point Id is read form the queue. After processing is finished “Output” storage poitn Id is sent back to the queue and becomes “Input” configuration for the next DFP. The whole process is shown on the following picture:

The advantage of this way is that user needs to carry only about “Output” storage point configuration because “Input” storage point configuration will be automatically obtained from the queue. On the other hand, it can greatly complicate management, backup and data reusing tasks because it will be not possible to find out which storage point was used as “Input” when particular Listener rule was applied.

Example: Listener Config:

<Rule Name="ADD Rule" WaitMessageTimeout="10" Workers="2" TargetStoragePoint="p1"> ... </Rule>

The TargetStoragePoint of one DFP, which becomes the source storage point of the next, is transferred over the queue either by storing it in the Record as metadata or by sending it with the Record as a JMS property (JMS properties are currently used for the DataSourceID).

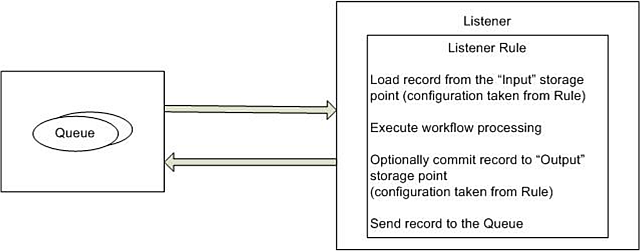

2. Listener rule contains configuration for both “Input” and “Output” partitions. In this case storage points configuration is not sent over the queue:

The advantage of this way is that for some particular rule it will always be possible to find out which “Input” and “Output” storage points were used for this rule. In this case it’s up to user to make sure that provided configuration is correct and consistent. This greatly simplifies backup and data management tasks so it’s proposed to implement this way of configuration. Also with this way configuration will be a little more complex, for example if the same rule should be applied in two different DFP sequences but data should be loaded from different “Input” storage points , it will be required to create two rules for each “Input” partition.

Example: Listener Config:

<Rule Name="ADD Rule" WaitMessageTimeout="10" Workers="2" TargetStoragePoint="p1" SourceStoragePoint="p2"> ... </Rule>

Note: Maybe it could even be possible (and useful?) to implement both: Default "input" storage points could be defined in Listener Rules, while a storage point ID could be passed with the message to override the default?
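A minimal sketch of the combined behavior suggested in the note, assuming a precedence of message property, then rule default, then system-wide default. The class `InputStoragePointResolver` and this exact precedence are illustrative assumptions only.

```java
/** Hypothetical resolver for the "Input" storage point of a single message. */
final class InputStoragePointResolver {
    /** Name of the default storage point used in the configuration example above. */
    static final String SYSTEM_DEFAULT = "DefaultStoragePoint";

    /**
     * @param messageStoragePoint ID passed with the queue message, may be null or empty
     * @param ruleSourceStoragePoint SourceStoragePoint of the Listener rule, may be null or empty
     */
    static String resolve(String messageStoragePoint, String ruleSourceStoragePoint) {
        if (messageStoragePoint != null && !messageStoragePoint.isEmpty()) {
            return messageStoragePoint; // the message overrides the rule default
        }
        if (ruleSourceStoragePoint != null && !ruleSourceStoragePoint.isEmpty()) {
            return ruleSourceStoragePoint;
        }
        return SYSTEM_DEFAULT; // nothing configured anywhere
    }

    public static void main(String[] args) {
        System.out.println(resolve("p9", "p2"));
        System.out.println(resolve(null, "p2"));
        System.out.println(resolve(null, null));
    }
}
```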

Rules regarding the alternative with OSGi properties

Example: Listener Config:

It is exactly the same as above:

<Rule Name="ADD Rule" WaitMessageTimeout="10" Workers="2" TargetStoragePoint="p1" SourceStoragePoint="p2"> ... </Rule>

To find the services providing the named storage points, the DFP has to look up services for the appropriate interfaces (RecordMetadataStorageService and BinaryStorageService) that have the specific storage point name set as the property "smila.storage.point.id". It is not necessary to know the name of the service registration or to specify two separate names for the Record and Binary storage services.

Passing the Storage-Location to BPEL/Pipelets/Blackboard

After the Listener obtains the storage point configuration, it should pass this configuration to the Blackboard so that records from that storage point can be loaded onto the Blackboard and processed by the BPEL workflow. This can be done in the following ways (each of which can be combined with either of the two solutions above):

- Storage point configuration passed as a Record Id property: The advantage of this approach is that it will always be easy to find out which storage point a particular record belongs to. The disadvantage is that the record Id is an immutable object meant to be used as a hash key, so changing Id properties during processing may not be a good idea. (This would best apply to option 1 in the above section.)

- Storage point configuration passed as Record Metadata: In this case an attribute or annotation containing the storage point ID should be added to the Record metadata before processing.

- Storage point configuration passed separately from the record: In this case the record itself won’t contain any information about the storage point configuration. E.g. in the case where the listener rules do not contain the input storage point, it could be passed in the queue message as a message property. This has the advantage that the listener can also select messages by the storage point of the contained records (e.g. to manage load balancing, or because not all listeners have access to all storage points).

Therefore we propose to use the third option. Note that it is still possible in this case to store the storage point ID in record metadata for informational purposes (e.g. setting a field in the final search index to read the storage point ID after search). But the relevant storage point ID for the queue listener will be a message property.
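If the storage point ID travels as a message property, a listener could restrict itself to certain storage points with a JMS message selector. The sketch below only builds such a selector string; the property name `StoragePointId` and the class `StoragePointSelector` are assumptions, not names defined by SMILA. The resulting string is the kind of value accepted by `Session.createConsumer(Destination, String messageSelector)`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical builder for a JMS message selector on the storage point ID. */
final class StoragePointSelector {
    /** Assumed name of the JMS message property carrying the storage point ID. */
    static final String PROPERTY = "StoragePointId";

    /** Builds a selector that accepts messages from any of the given storage points. */
    static String forStoragePoints(List<String> storagePointIds) {
        if (storagePointIds.isEmpty()) {
            throw new IllegalArgumentException("at least one storage point ID is required");
        }
        return storagePointIds.stream()
            // selector string literals use single quotes; an embedded quote is doubled
            .map(id -> "'" + id.replace("'", "''") + "'")
            .collect(Collectors.joining(", ", PROPERTY + " IN (", ")"));
    }

    public static void main(String[] args) {
        System.out.println(forStoragePoints(Arrays.asList("p1", "p2")));
    }
}
```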

Changes in the Blackboard service

There are the following proposals for Blackboard service changes:

- The Blackboard API will expose additional new methods for working with storage points: This imposes too many changes on clients of the Blackboard service, so we do not want to follow this road. Further details are omitted for now.

- Blackboard API won’t be changed.

In this case we introduce a new BlackboardManager service that returns references to the actual Blackboard instances using the default or a named storage point:

    interface BlackboardManager {
      Blackboard getDefaultBlackboard();
      Blackboard getBlackboardForStoragePoint(String storagePointId);
    }

    interface Blackboard {
      <contains current Blackboard API methods>
    }

With this configuration, the correct Blackboard reference should be passed to the BPEL workflow each time the workflow is executed. This can be done by the WorkflowProcessor, which will send the right Blackboard to the BPEL server. In their invocation, Pipelets and Processing Services get the Blackboard instance to be used from the processing engine anyway, so they will continue working with the Blackboard in the same way as is implemented now.
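One possible shape of such a manager is sketched here, assuming that one Blackboard instance per storage point is created lazily and then reused. `CachingBlackboardManager` and the factory function are hypothetical, and the `Blackboard` interface is reduced to an empty placeholder for the current API.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Placeholder for the current Blackboard API. */
interface Blackboard {
}

interface BlackboardManager {
    Blackboard getDefaultBlackboard();

    Blackboard getBlackboardForStoragePoint(String storagePointId);
}

/** Hypothetical manager that keeps one Blackboard instance per storage point. */
final class CachingBlackboardManager implements BlackboardManager {
    private final String defaultStoragePointId;
    private final Function<String, Blackboard> factory;
    private final Map<String, Blackboard> blackboards = new ConcurrentHashMap<>();

    CachingBlackboardManager(String defaultStoragePointId, Function<String, Blackboard> factory) {
        this.defaultStoragePointId = defaultStoragePointId;
        this.factory = factory;
    }

    @Override
    public Blackboard getDefaultBlackboard() {
        return getBlackboardForStoragePoint(defaultStoragePointId);
    }

    @Override
    public Blackboard getBlackboardForStoragePoint(String storagePointId) {
        // creates the Blackboard on first use and reuses it afterwards
        return blackboards.computeIfAbsent(storagePointId, factory);
    }

    public static void main(String[] args) {
        BlackboardManager manager =
            new CachingBlackboardManager("DefaultStoragePoint", id -> new Blackboard() { });
        Blackboard first = manager.getBlackboardForStoragePoint("p1");
        Blackboard second = manager.getBlackboardForStoragePoint("p1");
        System.out.println(first == second);
    }
}
```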

Thus, the WorkflowProcessor process(…) method should be enhanced to accept the Blackboard instance as an additional method argument instead of being statically linked to a single blackboard:

Id[] process(String workflowName, Blackboard blackboard, Id[] recordIds) throws ProcessingException;

This resembles the Pipelet/ProcessingService API. However, one difficulty may be finding a way to pass the information about which blackboard is to be used in a pipelet/processing service invocation around the integrated BPEL engine to the Pipelet/ServiceProcessingManagers. But I hope this can be solved.

Partitions

Requirements

The requirement for Partitions is that xml storage and binary storage should be able to work with ‘partitions’. This means that storages should be able to store data to different internal partitions.

Changes in Storages API

Currently SMILA operates with two physical storages: XML storage and binary storage. The API of both storages should be extended to handle partitioning. It should provide methods for getting data from a specified partition and for saving data to a specified partition. The partition should be passed as an additional parameter:

    _xssConnection.getDocument(Id, Partition);
    _xssConnection.addOrUpdateDocument(Id, Document, Partition);

For the first implementation storages will work with data in partitions in the following way:

- With xml storage each partition will contain its own copy of the record Document.

- With binary storage each partition will contain its own copy of the attachment.

This behavior can be improved for better performance in further versions. For more information please see the section “Proposed further changes” below.
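The first implementation described above, where each partition holds its own copy, can be pictured with a small in-memory sketch. The method names mirror the calls shown earlier, but `InMemoryPartitionedStorage` is hypothetical and plain strings stand in for the real `Id` and `Document` types.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory storage in which every partition keeps its own copy. */
final class InMemoryPartitionedStorage {
    // partition name -> (record ID -> document)
    private final Map<String, Map<String, String>> partitions = new HashMap<>();

    /** Saves the document into the given partition only. */
    void addOrUpdateDocument(String id, String document, String partition) {
        partitions.computeIfAbsent(partition, p -> new HashMap<>()).put(id, document);
    }

    /** Returns the document stored in the given partition, or null if there is none. */
    String getDocument(String id, String partition) {
        Map<String, String> documents = partitions.get(partition);
        return documents == null ? null : documents.get(id);
    }

    public static void main(String[] args) {
        InMemoryPartitionedStorage storage = new InMemoryPartitionedStorage();
        storage.addOrUpdateDocument("rec1", "<Record version='1'/>", "A");
        storage.addOrUpdateDocument("rec1", "<Record version='2'/>", "B");
        System.out.println(storage.getDocument("rec1", "A"));
        System.out.println(storage.getDocument("rec1", "B"));
    }
}
```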

Alternative implementation using OSGi services

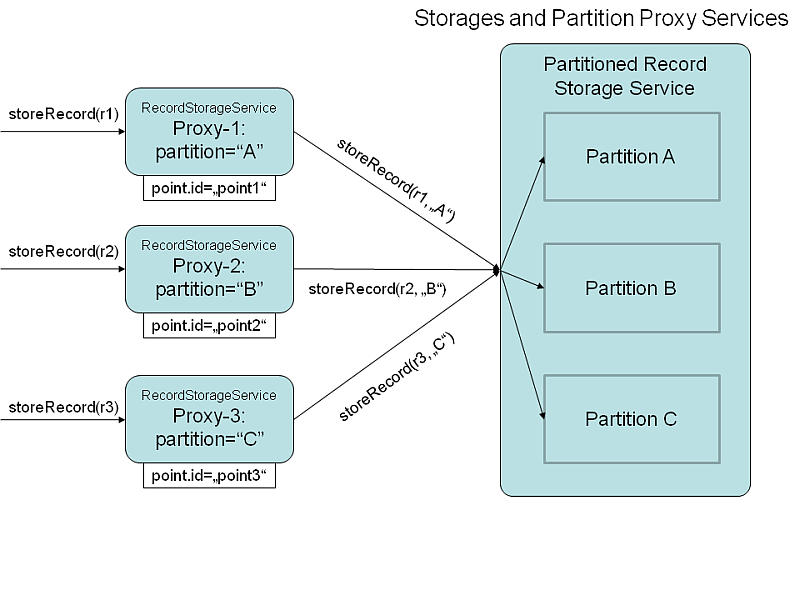

If we use the implementation of storage points using OSGi service properties described above in section "Alternative: Storage Point ID as OSGi service properties" we can use this to hide partitions completely from clients: In this case a storage service that wants to provide different partitions could register one "partition proxy" OSGi service for each partition that each have its own storage point ID, provide the correct storage interface (binary/record metdata/XML), but do not store data on their own, but just forward requests to the "master" storage service by just adding the partition name. This proxy service creation can be done programmatically and dynamically by the master service when a new partition is created (via service configuration or management console) so it's not necessary to create a DS component description for each partition.

The following figure should illustrate this setup:

This way no client would ever need to use additional partition IDs when communicating with a storage service, and storages that cannot provide partitions do not need to implement methods with partition parameters that cannot be used anyway.
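The proxy idea can be sketched without any OSGi machinery: the master storage hands out one proxy per partition, and the proxy adds the partition name to every call. All names below (`SimpleBinaryStorage`, `MasterBinaryStorage`, `proxyFor`) are hypothetical, and the registration of each proxy as an OSGi service with its own storage point ID is left out.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical, simplified storage interface without any partition parameter. */
interface SimpleBinaryStorage {
    void store(String id, byte[] data);

    byte[] fetch(String id);
}

/** "Master" storage that is the only component aware of partitions. */
final class MasterBinaryStorage {
    // partition name -> (attachment ID -> content)
    private final Map<String, Map<String, byte[]>> partitions = new HashMap<>();

    void store(String partition, String id, byte[] data) {
        partitions.computeIfAbsent(partition, p -> new HashMap<>()).put(id, data.clone());
    }

    byte[] fetch(String partition, String id) {
        Map<String, byte[]> entries = partitions.get(partition);
        byte[] data = entries == null ? null : entries.get(id);
        return data == null ? null : data.clone();
    }

    /** Creates the proxy that would be registered as a service for one partition. */
    SimpleBinaryStorage proxyFor(final String partition) {
        return new SimpleBinaryStorage() {
            @Override
            public void store(String id, byte[] data) {
                MasterBinaryStorage.this.store(partition, id, data); // forward, adding the partition
            }

            @Override
            public byte[] fetch(String id) {
                return MasterBinaryStorage.this.fetch(partition, id);
            }
        };
    }

    public static void main(String[] args) {
        MasterBinaryStorage master = new MasterBinaryStorage();
        SimpleBinaryStorage partitionA = master.proxyFor("A");
        partitionA.store("rec1", new byte[] {1, 2, 3});
        System.out.println(partitionA.fetch("rec1").length);
        System.out.println(master.proxyFor("B").fetch("rec1"));
    }
}
```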

Proposed further changes

With binary storage, attachments can be large (for example, when crawling video files), so creating an actual copy for each partition can be inefficient and can cause serious performance issues. As a solution to this problem, binary storage should not create an actual attachment copy for each partition, but rather keep a reference to the actual attachment when the attachment was not changed from one partition to another.

However, this solution can cause some problems too:

- Problems can occur if a backup job is done with an external tool that is not aware of references. This should not generally happen, because backups will normally be done with a properly configured tool.

- Some pipelet can change Attachment1 in Partition 1, while Partition 2 should still keep the old version of the attachment. In this case there should be some service that monitors the consistency of references.
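One way to keep references consistent in the second case is to never modify shared content in place: a change in one partition replaces only that partition's reference. The following in-memory sketch illustrates this under that assumption; `ReferencingBinaryStorage` and its methods are hypothetical and do not describe an existing SMILA service.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical binary storage in which partitions share unchanged attachments. */
final class ReferencingBinaryStorage {
    // partition -> (attachment ID -> content); several partitions may hold the same array
    private final Map<String, Map<String, byte[]>> references = new HashMap<>();

    /** Stores new content; only the reference of the given partition is replaced. */
    void store(String partition, String id, byte[] content) {
        references.computeIfAbsent(partition, p -> new HashMap<>()).put(id, content.clone());
    }

    /** Makes an attachment visible in another partition without copying its bytes. */
    void link(String fromPartition, String toPartition, String id) {
        Map<String, byte[]> source = references.get(fromPartition);
        if (source == null || !source.containsKey(id)) {
            throw new IllegalArgumentException("unknown attachment: " + id);
        }
        references.computeIfAbsent(toPartition, p -> new HashMap<>()).put(id, source.get(id));
    }

    /** Returns a copy so that callers cannot modify shared content in place. */
    byte[] fetch(String partition, String id) {
        Map<String, byte[]> entries = references.get(partition);
        byte[] content = entries == null ? null : entries.get(id);
        return content == null ? null : content.clone();
    }

    /** True if both partitions currently reference the same stored content. */
    boolean isShared(String partitionA, String partitionB, String id) {
        Map<String, byte[]> a = references.get(partitionA);
        Map<String, byte[]> b = references.get(partitionB);
        return a != null && b != null && a.get(id) != null && a.get(id) == b.get(id);
    }

    public static void main(String[] args) {
        ReferencingBinaryStorage storage = new ReferencingBinaryStorage();
        storage.store("Partition1", "Attachment1", new byte[] {1, 2});
        storage.link("Partition1", "Partition2", "Attachment1");
        storage.store("Partition1", "Attachment1", new byte[] {9}); // Partition2 keeps the old version
        System.out.println(storage.isShared("Partition1", "Partition2", "Attachment1"));
    }
}
```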