
SMILA/Project Concepts/IRM

Contents

- 1 Description

- 2 Discussion

- 3 Technical proposal

Description

Work out the concept for the IRM interface. It covers the aspects of Agents/Crawlers and, in part, Connectivity.

Discussion

see IRMDiscussion

Technical proposal

The basic idea is to provide a framework to easily integrate data from external systems via Agents and Crawlers. The processing logic of the data is implemented once in so-called Controllers, which use additional functionality provided by other components. To integrate a new external data source, only a new Agent or Crawler has to be implemented. Implementations for Agents/Crawlers are NOT restricted to Java!

Technologies

Here is an overview of the suggested technologies. Other technologies might be suitable as well and a switch should have only minor impacts on the concepts described below.

Architecture

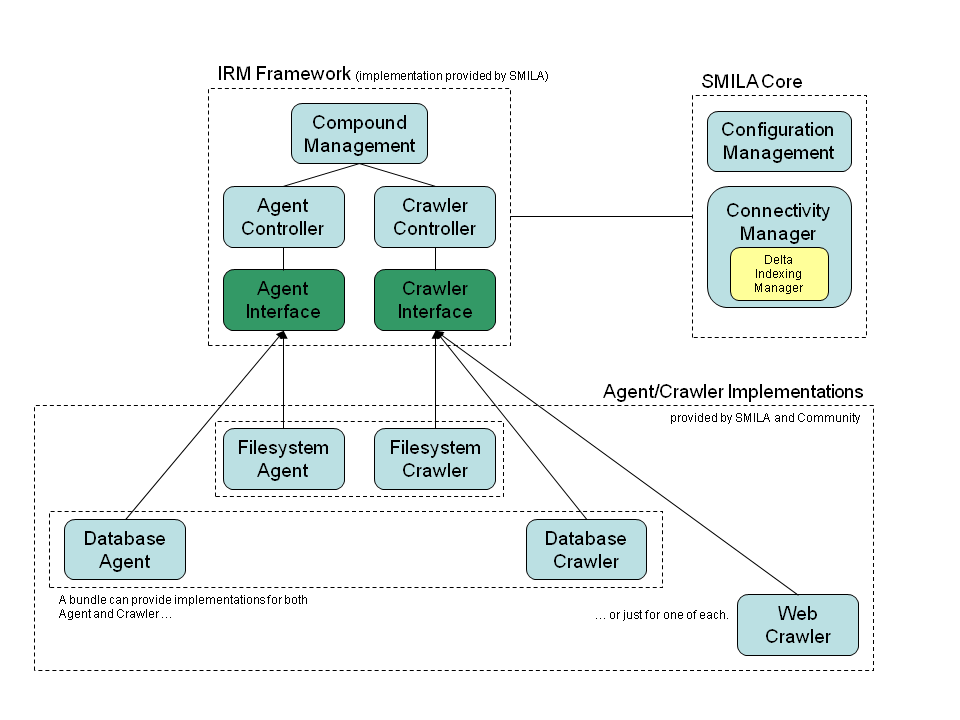

The chart shows the architecture of the IRM framework with its pluggable components (Agents/Crawlers) and its relationship to the SMILA entry point, the Connectivity Module.

The IRM Framework is provided and implemented by SMILA. Agents/Crawlers can be integrated easily by implementing the defined interfaces. An advanced implementation might even support both interfaces.

Agent Controller

The Agent Controller implements the general processing logic common to all Agents. Its service interface is used by Agents to execute an add/update/delete action. It has references to

- ConfigurationManagement: to get Configurations for itself and Agents

- Connectivity: as an entry point for the data for later processing by for example BPEL

- CompoundManagement: to delegate processing of compound documents to

- Delta Indexing Manager: this is only needed in conjunction with CompoundManagement; regular documents need not be checked. For regular documents an Agent knows which action to perform (add/update/delete) with the data. Elements of compound objects, however, should be updated incrementally: if, for example, a zip file is changed in the file system, the delta indexing logic ensures that only those elements that actually changed are added/updated/deleted.

- Daniel Stucky : we agreed that DeltaIndexingManager should be used for all add/update requests by Agents

Agent

Agents monitor a data source for changes (add/update/delete) or are triggered by events (e.g. trigger in databases).

Crawler Controller

The Crawler Controller implements the general processing logic common to all Crawlers. It has no service interface. All needed functionality should be addressed by a configuration/monitoring interface. It has references to

- ConfigurationManagement: to get Configurations for itself and Crawlers

- Connectivity: as an entry point for the data for later processing by for example BPEL

- CompoundManagement: to delegate processing of compound documents to

- Delta Indexing Manager: to determine if a crawled object needs to be processed (because it is new or was modified) or can be skipped.

Crawler

A Crawler actively crawls a DataSource and provides access to the collected data.

Useful Information: Premium Crawlers (provided by brox or empolis) should optimize their performance by using the Producer/Consumer pattern internally: one thread gathers the information and stores it in an internal queue, and the Crawler gets the data from the queue. Whether this pattern is applicable depends on the data source. It works at least for Filesystem and Web.

Compound Management

Handles processing of compound objects (e.g. zip, chm, ...). It does NOT implement the "Process Compound Logic" described below! This has to be done by the Controllers. See SMILA/Project_Concepts/CompoundManagement for details.

Connectivity Module

Stores content and metadata for later processing and generates a Queue entry (best case: the Queue only contains an ID). See SMILA/Project_Concepts/Connectivity for details.

Delta Indexing Manager

This is a Sub-Component of the Connectivity Module. It stores information about the last modification of each document (even compound elements) and can determine whether a document has changed. The information about the last modification could be some kind of HashToken. Each Crawler and the CompoundManagement should have its own configurable way of generating such a token. For Filesystem it may be computed from the last modification date and security information. For a database it may be computed over some columns. Some of its functionality is exposed through the Connectivity Module's API. See SMILA/Project_Concepts/Connectivity#Delta Indexing Manager for details.
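As an illustration of such a HashToken for the Filesystem case, here is a minimal Java sketch. The class `HashTokens`, its method names, the use of SHA-256 and the representation of the security information as a plain string are all assumptions made for this example. They are not part of the SMILA API.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper, not part of SMILA: derives a delta indexing token for a file. */
final class HashTokens {

    private HashTokens() {
    }

    /**
     * Combines the last modification time and a security descriptor string
     * into a hex-encoded SHA-256 digest. A change to either input is expected
     * to yield a different token.
     */
    static String fileToken(long lastModifiedMillis, String securityInfo) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            String input = lastModifiedMillis + "|" + securityInfo;
            byte[] hash = digest.digest(input.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(hash.length * 2);
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required to be available on every Java platform.
            throw new IllegalStateException(e);
        }
    }

    /** A document needs processing if it is unknown (null) or its token differs. */
    static boolean hasChanged(String storedToken, String currentToken) {
        return storedToken == null || !storedToken.equals(currentToken);
    }
}
```

A database Crawler could use the same idea and feed the concatenated values of the configured columns into the digest instead.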

Configuration Management

This is not part of this specification. It is assumed that this component manages configurations for all kinds of services, e.g. DataSources for Crawlers. Instead of the Controllers, each Agent/Crawler could have a reference to the Configuration Management. We separate business configuration (what an Agent/Crawler does) from the setup/deployment configuration (e.g. which Agents/Crawlers are connected to which Controller). A concept for the business configuration is here: SMILA/Project_Concepts/Index Order Configuration Schema.

Notes

- Most components must provide a configuration and monitoring interface (at least Agent and Crawler Controller), which should be based on SNMP (which in turn could be based on JMX for Java components).

- We need some kind of MimeType detection to decide when to do compound processing. This could but does not have to be a separate component.

- We have to provide a mechanism to restart aborted crawls at certain entry points. Such an entry point may be the last element of a data source known to have been queued successfully (ignoring elements of compound objects). What this entry point means to the Crawler is up to the Crawler. This is in addition to the logic provided by the Delta Indexing Manager, as it reduces runtime (especially interesting for mostly static content). Of course, not all Crawlers / Data Sources may allow selection of an entry point. Therefore this logic should be implemented directly in the Crawler if possible and be configured. No Crawler is forced to implement this logic! Changes to already indexed elements of a previous run are NOT considered! This feature could be realized by simply segmenting the data into small parts (e.g. not one root directory but multiple sub-sub directories) through configuration alone. Here are some basic implementation ideas:

- FileSystem: In this case the entry point would be a file (absolute path). I assume that the elements of a directory are always ordered. So the crawling starts in the parent directory of this entry point file. It iterates through it (ignoring all elements it finds) until it finds the entry point file. From then on, all elements are returned by the Crawler. So far, all elements below the entry point can be found. However, there may be further elements in the sibling folders of the entry point's parent folder. So this procedure has to be repeated up the tree structure until the base directory is reached.

- Database: The only prerequisite is that the database always returns the results of a query in the same order, either a natural order provided by the database or an ORDER BY in the SQL statement. Then the entry point can be any unique id or key. Accessing the entry point can be done in the SQL statement by adding a condition that restricts the result to all ids >= "entry point".

- Web: this is much more complicated, as the links in HTML files are not bi-directional.
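The Database idea can be sketched as a small query builder. This is only an illustration: the class `ResumeQuery`, its method and the table and column names used in the usage note are hypothetical, and the entry point is assumed to be bound later as a statement parameter.

```java
/** Hypothetical helper: builds the SQL a database Crawler could use to resume a crawl. */
final class ResumeQuery {

    private ResumeQuery() {
    }

    /**
     * Returns a parameterized query over the given table, ordered by the key column.
     * When resuming, a "key >= ?" restriction is added; the caller binds the entry
     * point to the single placeholder. Table and column names are assumed to come
     * from trusted configuration, not from user input.
     */
    static String build(String table, String keyColumn, boolean resume) {
        StringBuilder sql = new StringBuilder("SELECT * FROM ").append(table);
        if (resume) {
            sql.append(" WHERE ").append(keyColumn).append(" >= ?");
        }
        // A fixed order is the prerequisite for a meaningful entry point.
        sql.append(" ORDER BY ").append(keyColumn);
        return sql.toString();
    }
}
```

For example, `ResumeQuery.build("docs", "id", true)` yields `SELECT * FROM docs WHERE id >= ? ORDER BY id`, while a fresh crawl (`resume == false`) omits the WHERE clause.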

Business Interfaces

- ConfigID: the ID of a configuration for an Agent or Crawler Job

- Config: a configuration for an Agent/Crawler

- DIRecord: a data structure containing only DeltaIndexing information of a single object provided by an agent/crawler

- Record: a data structure containing all data of a single object provided by an agent/crawler

Agent Controller

    interface AgentController {
        // triggers the add process
        void add(Record record);

        // triggers the update process
        void update(Record record);

        // triggers the delete process; the Record will most likely
        // only contain the ID and no data
        void delete(Record record);
    }

CrawlerFactory (obsolete)

    interface CrawlerFactory {
        Crawler createCrawler(Config config);
    }

Creates a new Crawler object with the given Configuration and returns it.

Crawler

    interface Crawler {
        /**
         * Returns an array of DIRecord objects (the maximum size of the array is
         * determined by configuration or Crawler implementation) or null, if no
         * more DIRecords exist.
         */
        DIRecord[] getNextDIRecords();

        /**
         * Returns a Record object. The parameter pos refers to the position of the
         * DIRecord in the DIRecord[] returned by getNextDIRecords().
         */
        Record getRecord(int pos);

        /**
         * Management method used to initialize a Crawler.
         */
        void initialize(Config config);

        /**
         * Management method used to close Conversations.
         */
        void close();
    }

The Crawler's method getNextDIRecords() returns arrays of DIRecord objects. If no more DIRecord objects exist, it returns null to signal the end of the "iteration". The maximum size of the returned DIRecord[] should be configurable. Some Crawlers may only allow for size=1 because of implementation limitations. For each DIRecord returned, the CrawlerController applies the DeltaIndexingLogic. Only those DIRecord objects that are new or have changed are requested as full Record objects via getRecord(), using the position in the DIRecord[] to identify the Record. The array thus acts like a frame over the current DIRecords. If getNextDIRecords() is called again, the frame moves on to the next elements.
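The frame mechanism can be illustrated with a small, self-contained Java sketch. Everything here is simplified for the example: `DIRecord` and `Record` are reduced to a few string fields, `InMemoryCrawler` and `FrameLoop` are hypothetical names, and a plain map of known hashes stands in for the Delta Indexing Manager.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Delta indexing information of one object: an ID and a hash token. */
final class DIRecord {
    final String id;
    final String hash;
    DIRecord(String id, String hash) { this.id = id; this.hash = hash; }
}

/** Full data of one object (reduced to an ID and a content string here). */
final class Record {
    final String id;
    final String content;
    Record(String id, String content) { this.id = id; this.content = content; }
}

/** Subset of the Crawler interface described above. */
interface Crawler {
    DIRecord[] getNextDIRecords();
    Record getRecord(int pos);
}

/** Toy Crawler over fixed data; "step" is the maximum frame size. */
final class InMemoryCrawler implements Crawler {
    private final String[][] data; // rows of {id, hash, content}
    private final int step;
    private int frameStart = 0;    // index of the first element of the current frame
    private int next = 0;          // index of the first element not yet returned

    InMemoryCrawler(String[][] data, int step) { this.data = data; this.step = step; }

    public DIRecord[] getNextDIRecords() {
        if (next >= data.length) {
            return null; // end of iteration
        }
        frameStart = next;
        int end = Math.min(next + step, data.length);
        DIRecord[] frame = new DIRecord[end - frameStart];
        for (int i = frameStart; i < end; i++) {
            frame[i - frameStart] = new DIRecord(data[i][0], data[i][1]);
        }
        next = end;
        return frame;
    }

    public Record getRecord(int pos) {
        String[] row = data[frameStart + pos]; // pos is relative to the current frame
        return new Record(row[0], row[2]);
    }
}

/** Controller-side loop: fetches full Records only for new or changed objects. */
final class FrameLoop {
    static List<Record> crawl(Crawler crawler, Map<String, String> knownHashes) {
        List<Record> toProcess = new ArrayList<Record>();
        DIRecord[] frame;
        while ((frame = crawler.getNextDIRecords()) != null) {
            for (int pos = 0; pos < frame.length; pos++) {
                DIRecord di = frame[pos];
                if (!di.hash.equals(knownHashes.get(di.id))) {
                    toProcess.add(crawler.getRecord(pos));
                    knownHashes.put(di.id, di.hash);
                }
            }
        }
        return toProcess;
    }
}
```

Note that getRecord(pos) is only valid for the frame returned by the most recent getNextDIRecords() call, which is why the loop requests all needed Records before moving the frame on.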

CompoundManagement

see SMILA/Project_Concepts/CompoundManagement#Interfaces for details.

Connectivity

see SMILA/Project_Concepts/Connectivity#Interfaces for details.

Delta Indexing Manager

see SMILA/Project_Concepts/Connectivity#Interfaces for details.

Notes

- Monitoring functionality (e.g. which crawl jobs are running on a crawler and what their state is) is needed for each Component, but is not part of the SCA interface.

- Only one crawl job per ConfigID may run at a time! If the crawl job finishes without fatal errors in incremental update mode, then the list of obsolete IDs is deleted via the according Data objects.

- More meaningful terms for Classes, Methods and Parameters are welcome.

How to implement the interfaces

The idea is that integration developers only have to implement Agents and Crawlers. The Agent and Crawler Controllers should be provided by the framework, preferably developed in Java. Initial implementations for Components like Compound Processing and Delta Indexing Manager should also be provided by the framework, but it should be possible to replace them with other implementations. So most of the time developers will create new Agents and/or Crawlers. These can be implemented in any programming language that is supported by an SCA implementation. An implementation could support both the Agent and the Crawler interface, but should never be forced to implement both! This makes development of an Agent/Crawler for a specific purpose much easier.

Different implementations of the Delta Indexing Manager may be interesting with regard to performance and storage of this information, e.g. it could be stored directly in the search index.

Performance Evaluation

Regarding the discussions about the Crawler (formerly Iterator) interface design, I set up some performance tests to compare a classic Iterator pattern (1 object at a time) against the proposal to use a list of objects. I tested it with a FilesystemCrawler that iterates on Delta-Indexing information only (an ID and a hash). The implementation uses a blocking Queue with a maximum number of elements (capacity). The Crawler itself returns a list of objects with a configurable maximum number of elements (step).

The test iterates over a directory containing 10001 html files (it does not open the file content, it just reads the path and last modification date). Using plain Java objects (no SCA) it takes about 600 ms, and no real difference is measurable. But we want to be able to distribute the software components. Therefore I also used SCA in the tests. SCA incurs some overhead, as proxy objects are used with any binding mechanism.

- single VM

| capacity/step | 1/1 | 10/1 | 100/1 | 10/10 | 100/10 | 1000/100 |
|---|---|---|---|---|---|---|
| binding.sca | 22.7 sec | 22.7 sec | 22.7 sec | 2.8 sec | 2.6 sec | 765 ms |
| binding.rmi | 26.9 sec | 26.7 sec | 26.9 sec | 3.5 sec | 3.3 sec | 1.0 sec |
| binding.ws | 3.8 min | 3.8 min | 3.8 min | 2.2 min | 2.2 min | 1.9 min |

- separate VMs

| capacity/step | 1/1 | 10/1 | 100/1 | 10/10 | 100/10 | 1000/100 |
|---|---|---|---|---|---|---|
| binding.rmi | 29.8 sec | 29.0 sec | 30.2 sec | 4.3 sec | 3.6 sec | 1.3 sec |
| binding.ws | 4.5 min | 4.5 min | 4.5 min | 2.4 min | 2.4 min | 2.0 min |

- remote machines (KL-GT)

| capacity/step | 1/1 | 10/1 | 100/1 | 10/10 | 100/10 | 1000/100 |
|---|---|---|---|---|---|---|
| binding.rmi | 8.9 min | 9.1 min | 8.6 min | 54.5 sec | 59.0 sec | 6.8 sec |
| binding.ws | 75.4 min | 72.6 min | 74.7 min | 7.4 min | 6.8 min | 2.4 min |

So it is quite obvious that with SCA, and especially with remote communication, there is a big difference between single object iteration and lists. Therefore the Crawler interface should support lists. If a particular implementation is not capable of supporting lists, it is still possible to set step to 1.
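One plausible way to read these numbers, assuming that the per-call overhead of the binding dominates the runtime, is to count how many calls to the Crawler are needed for a given step. The helper below is a back-of-the-envelope sketch with a hypothetical name. It is not taken from the original test code and it ignores the queue capacity.

```java
/** Back-of-the-envelope helper, not taken from the original test code. */
final class CallCount {

    private CallCount() {
    }

    /**
     * Number of calls needed to iterate over "items" objects with lists of at
     * most "step" elements, plus the final call that signals the end.
     */
    static long calls(long items, long step) {
        long lists = (items + step - 1) / step; // ceiling division
        return lists + 1;
    }
}
```

For the 10001 files of the test this gives 10002 calls with step 1, 1002 calls with step 10 and 102 calls with step 100.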

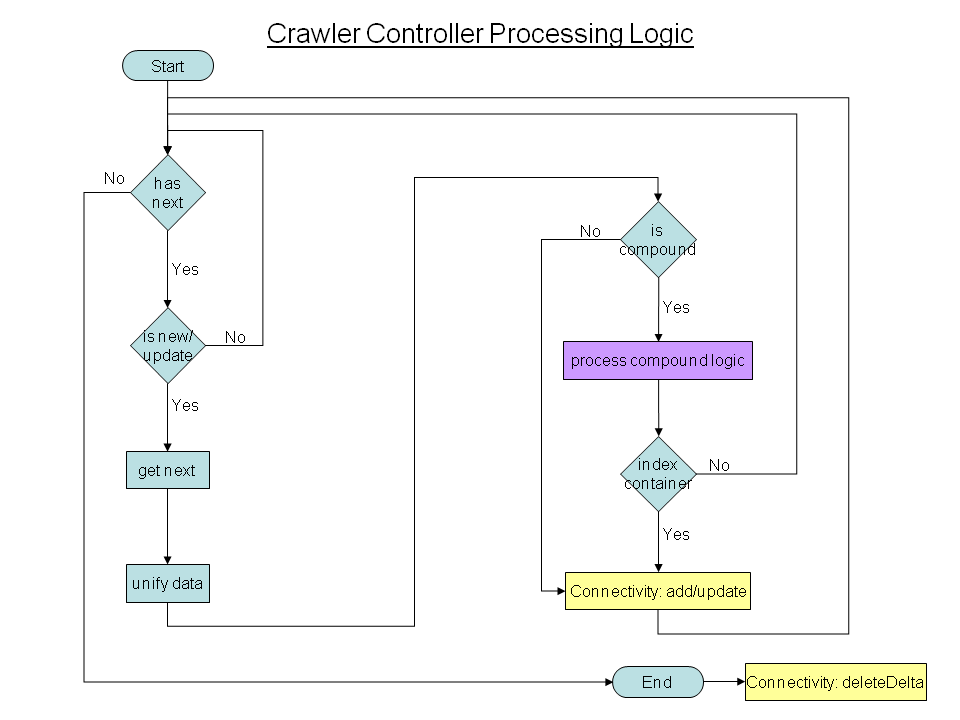

CrawlerController Processing Logic

This chart shows the CrawlerController Processing Logic:

The process starts when a Crawler has been created by a CrawlerFactory and received by the CrawlerController. The logic checks if there is a next data element. If so, it checks whether this data is new or has changed, using the Delta Indexing Manager. If so, the data is retrieved and unified in a generic representation. Then it is checked whether the data is some kind of compound object (e.g. zip), in which case the Process Compound Logic is triggered. Otherwise the data is sent to the Connectivity Component. For Compound objects it may be configured whether or not to send them to the Connectivity Component.

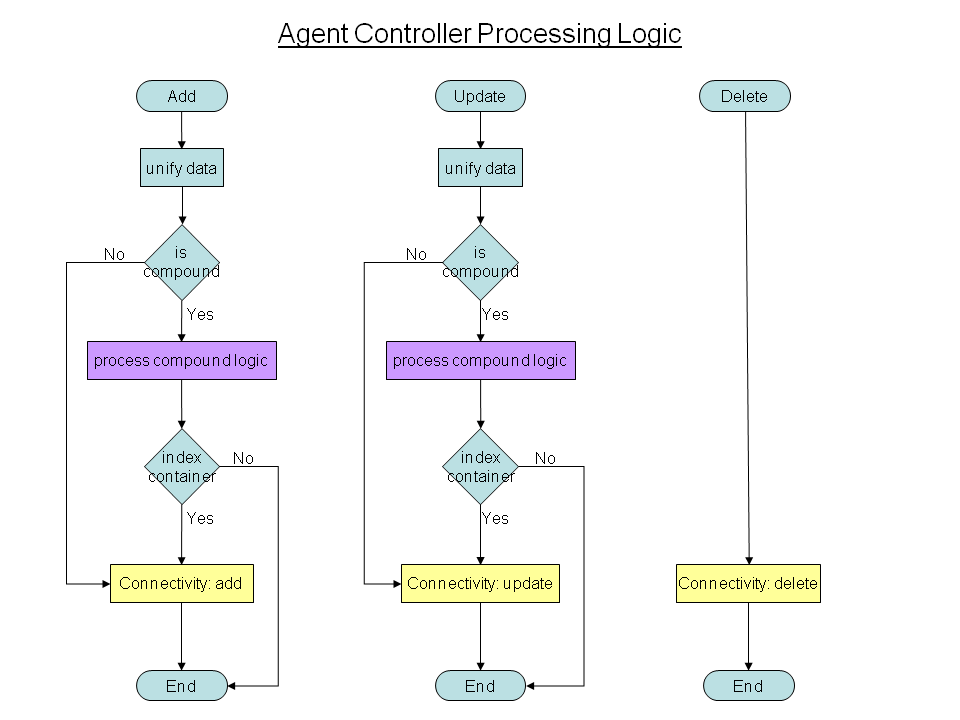

AgentController Processing Logic

This chart shows the AgentController Processing Logic:

The Agent Controller provides the 3 processes Add, Update and Delete.

- Add: At first the data provided by the Agent is unified. Then it is checked whether the data is a compound object, and if so it is processed just as in the Crawler Processing Logic. Otherwise Connectivity:add() is executed with the data.

- Update: is very similar to Add; the only difference is that at the end Connectivity:update() is called.

- Delete: Here just a call to Connectivity:delete() is made.

Note: There is a special case in the Agent Controller Processing Logic during "Update" that is not shown in the chart: After the Process Compound Logic is done, we need to check if there are elements in the index that need to be removed (that were formerly included in this container). So we need functionality to perform deleteDelta() on a compound object and its subelements in addition to performing it on a whole dataSource.
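The three processes, including the special case from the note, can be sketched as follows. This is not the framework implementation: `CallLog` is a hypothetical stand-in that only records which calls would be made, records are reduced to an ID and a MIME type, and compound detection by MIME type (with zip as the only compound type) is an assumption for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for Connectivity and friends; it only records which calls were made. */
final class CallLog {
    final List<String> calls = new ArrayList<String>();
}

/** Sketch of the three AgentController processes; records are reduced to an ID and a MIME type. */
final class AgentControllerSketch {
    private final CallLog log;

    AgentControllerSketch(CallLog log) {
        this.log = log;
    }

    void add(String id, String mimeType) {
        String unified = unify(id);
        if (isCompound(mimeType)) {
            processCompound(unified);
        } else {
            log.calls.add("Connectivity:add " + unified);
        }
    }

    void update(String id, String mimeType) {
        String unified = unify(id);
        if (isCompound(mimeType)) {
            processCompound(unified);
            // Special case from the note: remove elements that are no longer in the container.
            log.calls.add("deleteDelta " + unified);
        } else {
            log.calls.add("Connectivity:update " + unified);
        }
    }

    void delete(String id) {
        log.calls.add("Connectivity:delete " + id);
    }

    /** Placeholder for data unification; here it only trims whitespace. */
    private static String unify(String id) {
        return id.trim();
    }

    /** Assumption: compound detection by MIME type, with zip as the only known compound type. */
    private static boolean isCompound(String mimeType) {
        return "application/zip".equals(mimeType);
    }

    /** Placeholder for the Process Compound Logic. */
    private void processCompound(String id) {
        log.calls.add("processCompound " + id);
    }
}
```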

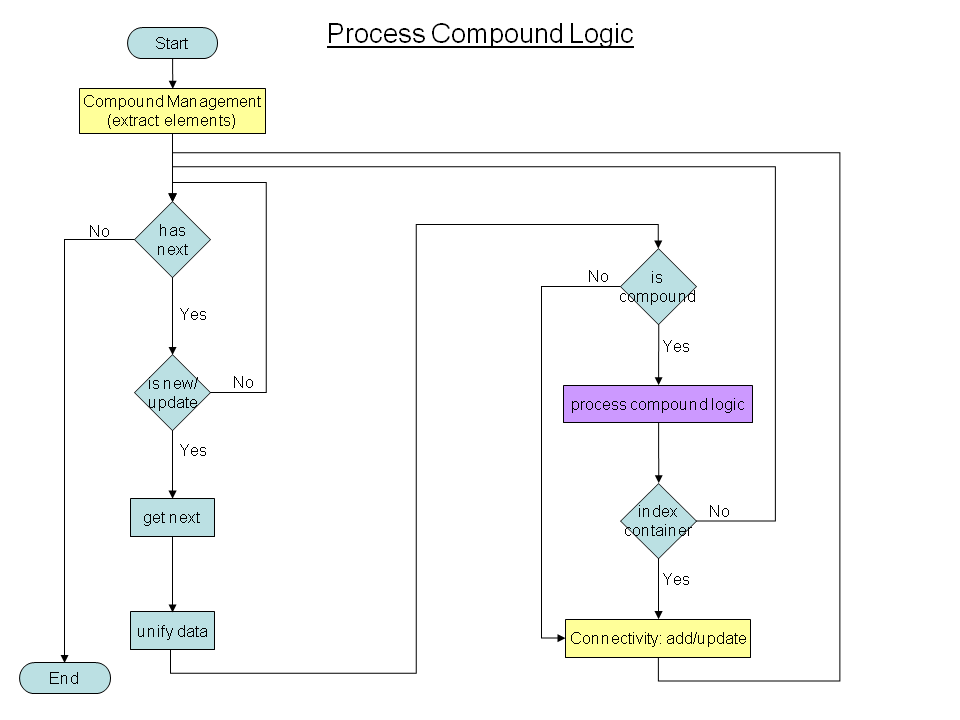

Process Compound Logic

This chart shows the Process Compound Logic in detail:

This processing logic is NOT implemented in the Component "Compound Processing"; that component is only used to extract the data and provide functionality to iterate over it. This logic is implemented in the Agent and Crawler Controllers. The Process Compound Logic is very similar to the Crawler Controller Processing Logic. The main difference is that before iteration, the Compound object's elements have to be extracted (using the Compound Processing Component). Note that Process Compound calls itself recursively if an element is a Compound object. This is needed because you may have zip in zip in ...

Note: The Process Compound Logic uses the Delta Indexing Manager to determine if an element has to be added/updated. There is a special case in the Agent Controller Processing Logic during "Add": Here the Agent knows that the Compound object and all its elements are new. No check against the Delta Indexing Manager is needed here. Also, no check for objects to delete has to be performed. This is not shown in the charts, as this is only a minor performance issue that complicates the process. Nevertheless, this logic should be implemented.
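The recursion can be sketched in a few lines of Java. The types are hypothetical and heavily simplified: an `Element` already carries its extracted children (in the real design the Compound Processing Component would do the extraction), and a map of known hashes stands in for the Delta Indexing Manager. Skipping an unchanged nested compound as a whole is an assumption of this sketch.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;

/** Hypothetical element of a compound object; children are non-empty only for compounds. */
final class Element {
    final String id;
    final String hash;
    final List<Element> children;

    Element(String id, String hash, Element... children) {
        this.id = id;
        this.hash = hash;
        this.children = Arrays.asList(children);
    }

    boolean isCompound() {
        return !children.isEmpty();
    }
}

final class CompoundProcessor {

    private CompoundProcessor() {
    }

    /**
     * Collects the IDs of all non-compound elements that are new or changed,
     * descending recursively into nested compound objects (zip in zip in ...).
     */
    static void process(Element compound, Map<String, String> knownHashes, List<String> toSend) {
        for (Element element : compound.children) {
            if (element.hash.equals(knownHashes.get(element.id))) {
                continue; // unchanged according to the delta indexing information
            }
            knownHashes.put(element.id, element.hash);
            if (element.isCompound()) {
                process(element, knownHashes, toSend); // recursive call for nested compounds
            } else {
                toSend.add(element.id); // would be sent to Connectivity
            }
        }
    }
}
```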

Data Unification (unify data)

All data from various data sources has to be represented in a unified format. This includes functionality to normalize the data (e.g. one representation for date formats) and a renaming mechanism for data identifiers (mapping of attribute names). Both should be configurable.
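As a rough illustration of these two configurable steps, the following Java sketch renames attributes according to a mapping and normalizes date values to ISO 8601. The class `Unifier`, the flat string-to-string attribute model and the attribute names in the usage note are assumptions for this example only.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical helper: renames attributes and normalizes date values. */
final class Unifier {

    private Unifier() {
    }

    /**
     * @param raw              attributes as delivered by the data source
     * @param renames          mapping from source attribute names to unified names
     * @param dateAttributes   unified names of attributes that hold dates
     * @param sourceDateFormat date format used by the data source
     * @return attributes with unified names; dates are formatted as ISO 8601 (yyyy-MM-dd)
     */
    static Map<String, String> unify(Map<String, String> raw, Map<String, String> renames,
            Set<String> dateAttributes, DateTimeFormatter sourceDateFormat) {
        Map<String, String> unified = new LinkedHashMap<String, String>();
        for (Map.Entry<String, String> entry : raw.entrySet()) {
            String name = renames.containsKey(entry.getKey())
                    ? renames.get(entry.getKey())
                    : entry.getKey(); // attributes without a mapping keep their name
            String value = entry.getValue();
            if (dateAttributes.contains(name)) {
                value = LocalDate.parse(value, sourceDateFormat).toString();
            }
            unified.put(name, value);
        }
        return unified;
    }
}
```

With a mapping of `mdate` to `lastModified` and the source format `dd.MM.yyyy`, the input value `31.12.2007` would end up as `lastModified` = `2007-12-31`.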

The resulting format could be an XML-like structure:

    <attribute name="myName" type="myDataType">
      <value>ABC</value>
      <value>XYZ</value>
      ...
    </attribute>

Each attribute has a name and a data type (string, int, double, date, boolean, byte[]). If an attribute has multiple values, this can be represented by multiple <value> tags. In case of data type byte[], the <value> tag should NOT contain the bytes but a link to where the bytes are stored.

Dependencies: This whole process has to be specified in more detail. But the process depends on what data structures the following processes expect and how and where the data is stored (e.g. XML database).

Juergen Schumacher:

We need an XML format for SMILA objects anyway. Probably we should reuse it here instead of inventing a second one. It will have to be a bit more complex, though.

Configuration

Configurations for Agents/Crawlers should share as much as possible and should be extendable for new DataSources. This has to be specified in detail. Here are some ideas what configurations should contain:

- unique ID

- Filter

  - filename

  - path

  - mimetype

  - filesize

  - date

  - ...

- entry points to continue aborted jobs

- sleep parameter (to avoid system overload/DoS)

Combining Agents and Crawlers

For certain use cases it may be desirable to easily combine Agent and Crawler functionality. For example, an Agent monitors a folder structure in the filesystem. Because of some network problems the Agent does not notice all changes made in the meantime. A user may want the Agent to automatically synchronize the current state of the folder structure with the search index. Thus the folder structure needs to be crawled using delta indexing.

Of course the IRM supports this use case, but the logic "what to execute when" has to be provided externally (by a user). Can we provide functionality that allows an Agent to execute a crawl on its monitored data? What are the requirements?

- Agent and Crawler configurations should be interchangeable or harmonized

- Agents (or the AgentController) need a reference to the CrawlerController

Miscellaneous

The following issues must be addressed and specified in detail:

- error handling

- retry logic (connection failures, timeouts)

- synchronization: synchronization of access to external data is desirable. I think it is possible to ensure that a certain Configuration (identified by an ID) is used only once at a time. This should be managed by the Controllers. However, I think it's not possible to detect overlapping configurations, e.g. Config1 crawls C:\data, Config2 crawls c:\data\somwhere\else and Config3 watches C:\data\triggerfolder. This may be even more complicated with UNC paths and folder shares, or imagine the same data being accessible via filesystem and http.

- what about access to single documents by ID (e.g. URI)? Should this be supported by Agents/Crawlers or should this be implemented in a separate service used in BPEL? A use case could be a scenario where URLs are stored in a database. So a DB-Crawler would provide the URLs, but how is the content of the URLs retrieved?
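For the synchronization item, ensuring that a Configuration is used only once at a time within a single Controller could look roughly like the sketch below. The class `JobRegistry` and its methods are hypothetical. The sketch only covers one Controller instance in one VM and does not address overlapping configurations or distributed deployments.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical registry a Controller could use to allow only one job per ConfigID. */
final class JobRegistry {
    // Thread-safe set of ConfigIDs with a currently running job.
    private final Set<String> running = ConcurrentHashMap.newKeySet();

    /** Returns true if the job may start, false if a job with this ConfigID is already running. */
    boolean tryStart(String configId) {
        return running.add(configId); // atomic: only one caller gets true per ConfigID
    }

    /** Must be called when the job ends, whether it succeeded or failed. */
    void finish(String configId) {
        running.remove(configId);
    }
}
```

A Controller would call `tryStart` before starting a crawl and `finish` in a `finally` block, so that an aborted job does not block its ConfigID forever.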

Deployment & Implementation concept (OSGi Bundles, (Remote) Communication, Data Flow)

see SMILA/Project_Concepts/Deployment&Implementation Concept for the IRM Framework