
SMILA/Documentation/Importing/Crawler/Web

The WebCrawler, WebFetcher and WebExtractor workers are used to import files from a web server. For the big picture and the workers' interaction, see the Importing Concept.


Web Crawler Worker

- Worker name: webCrawler

- Parameters:

- dataSource: (req.) name of the data source; currently only used to mark the produced records.

- startUrl: (req.) URL to start crawling at. Must be a valid URL; no additional escaping is done.

- waitBetweenRequests: (opt.) time to wait between HTTP requests, in milliseconds, as a long value (default: 0).

- linksPerBulk: (opt.) number of links in one bulk object for follow-up tasks (default: 10).

- linkErrorHandling: (opt., default "drop") specifies how to handle IO errors (e.g. network problems) when trying to access the URL in a link record to crawl: possible values are:

- "drop": The record will be ignored and not added to the "crawledRecords" output, but other links in the same bulk can be processed successfully. A warning will be written to the log.

- "retry": The current task is finished with a recoverable error so that it might be retried later (depending on job/task manager settings). However, if the task cannot be finished successfully within the configured number of retries, it will finally fail and all other links contained in the same bulk will not be crawled either.

- filters: (opt.) a map of filter settings, i.e. instructions on which links to include in or exclude from the crawl.

- maxCrawlDepth: the maximum crawl depth when following links.

- followRedirects: whether to follow redirects or not (default: false).

- maxRedirects: maximum number of allowed redirects when following redirects is enabled (default: 1).

- stayOn: whether to follow links that would leave the host or domain. Valid values: host, domain (default: follow all links).

- Hint: the implementation of stayOn 'domain' is currently very simple. It takes the host name without its first part as the domain, provided that at least two host name parts remain (www.foo.com -> foo.com, foo.com -> foo.com). This does not always fit (bbc.co.uk -> co.uk (!)); in such cases, use URL patterns instead.

- urlPatterns: regular expressions for filtering crawled elements by their URL. Note that the crawler additionally evaluates the crawled sites' robots.txt files; see below for details.

- include: if include patterns are specified, at least one of them must match the URL. If no include patterns are specified, this is handled as if all URLs are included.

- exclude: if at least one exclude pattern matches the URL, the crawled element is filtered out

- mapping (req.) specifies how to map link properties to record attributes

- httpUrl (req.) mapping attribute for the URL

- httpMimetype (opt.) mapping attribute for the mime type

- httpCharset (opt.) mapping attribute for character set

- httpContenttype (opt.) mapping attribute for the content type

- httpLastModified (opt.) mapping attribute for the link's last modified date

- httpSize (opt.) mapping attribute for the link content's size (in bytes)

- httpContent (opt.) attachment name where the link content is written to


- Task generator: runOnceTrigger

- Input slots:

- linksToCrawl: Records describing links to crawl.

- Output slots:

- linksToCrawl: Records describing outgoing links from the crawled resources. Should be connected to the same bucket as the input slot.

- crawledRecords: Records describing crawled resources. For resources of mimetype text/html the records have the content attached. For other resources, use a webFetcher worker later in the workflow to get the content.
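The include/exclude rules and the simple stayOn 'domain' heuristic described above can be illustrated with a small Java sketch. It is not the SMILA implementation: the class and method names are hypothetical, the sketch assumes that a pattern counts as matching only if it matches the whole URL (`Matcher.matches()`), and it assumes that a link stays on the domain if its host equals the computed domain or is a subdomain of it.

```java
import java.util.List;
import java.util.Locale;
import java.util.regex.Pattern;

/** Hypothetical sketch of the webCrawler filter rules; not the SMILA implementation. */
final class LinkFilterSketch {

  /** stayOn=domain heuristic: drop the first host name part if at least two parts remain. */
  static String domainOf(String host) {
    String[] parts = host.split("\\.");
    return parts.length > 2 ? host.substring(host.indexOf('.') + 1) : host;
  }

  /** mode is "host", "domain" or null (null = default: follow all links). */
  static boolean passesStayOn(String mode, String startHost, String linkHost) {
    if (mode == null) {
      return true;
    }
    String start = startHost.toLowerCase(Locale.ROOT);
    String link = linkHost.toLowerCase(Locale.ROOT);
    if (mode.equals("host")) {
      return link.equals(start);
    }
    // "domain" (assumption): the link host is the computed domain or one of its subdomains.
    String domain = domainOf(start);
    return link.equals(domain) || link.endsWith("." + domain);
  }

  /** Include: at least one pattern must match (no patterns = all included); exclude: none may match. */
  static boolean passesUrlPatterns(String url, List<Pattern> includes, List<Pattern> excludes) {
    boolean included = includes.isEmpty()
        || includes.stream().anyMatch(p -> p.matcher(url).matches());
    boolean excluded = excludes.stream().anyMatch(p -> p.matcher(url).matches());
    return included && !excluded;
  }

  public static void main(String[] args) {
    List<Pattern> include = List.of(Pattern.compile("http://wiki\\.eclipse\\.org/SMILA/.*"));
    List<Pattern> exclude = List.of(Pattern.compile(".*\\.pdf"));
    System.out.println(passesUrlPatterns("http://wiki.eclipse.org/SMILA/Documentation", include, exclude)); // true
    System.out.println(passesUrlPatterns("http://wiki.eclipse.org/SMILA/spec.pdf", include, exclude)); // false
    System.out.println(domainOf("bbc.co.uk")); // co.uk, the known weakness of the heuristic
  }
}
```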

Filter patterns and normalization

When defining filter patterns, keep in mind that URLs are normalized before filters are applied. Normalization means:

- the URL will be made absolute when it's relative (e.g. /relative/link -> http://my.domain.de/relative/link)

- paths will be normalized (e.g. host/path/../path2 -> host/path2)

- scheme and host will be converted to lower case (e.g. HTTP://WWW.Host.de/Path -> http://www.host.de/Path)

- Hint: The path will not be converted to lower case!

- fragments will be removed (e.g. host/path#fragment -> host/path)

- the default port 80 will be removed (e.g. host:80 -> host)

- 'opaque' URIs cannot be handled and are filtered out automatically (e.g. javascript:void(0), mailto:andreas.weber@empolis.com)
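The normalization steps listed above can be approximated with `java.net.URI`, as in the following sketch. The class and method names are hypothetical, and the handling of links without a host, of unparsable links, and the re-encoding of decoded path and query components are assumptions of this sketch, not documented behavior of the crawler.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;
import java.util.Optional;

/** Hypothetical sketch of the normalization rules above; not SMILA's DefaultLinkFilter. */
final class UrlNormalizerSketch {

  /** Returns the normalized absolute URL, or an empty Optional if the link is filtered out. */
  static Optional<String> normalize(String baseUrl, String link) {
    try {
      URI uri = new URI(baseUrl).resolve(new URI(link)); // relative -> absolute
      if (uri.isOpaque() || uri.getHost() == null) {
        // e.g. mailto:... or javascript:void(0); links without a host are dropped (assumption)
        return Optional.empty();
      }
      uri = uri.normalize(); // host/path/../path2 -> host/path2
      String scheme = uri.getScheme().toLowerCase(Locale.ROOT);
      String host = uri.getHost().toLowerCase(Locale.ROOT); // the path keeps its case
      int port = uri.getPort() == 80 ? -1 : uri.getPort(); // drop the default port 80
      // Rebuild without the fragment; decoded path/query components are re-encoded here.
      URI result = new URI(scheme, uri.getUserInfo(), host, port, uri.getPath(), uri.getQuery(), null);
      return Optional.of(result.toString());
    } catch (URISyntaxException e) {
      return Optional.empty(); // unparsable link (assumption: treated like a filtered link)
    }
  }

  public static void main(String[] args) {
    System.out.println(normalize("http://my.domain.de/start.html", "/relative/link").orElse("(filtered)"));
    System.out.println(normalize("http://my.domain.de/", "mailto:someone@example.com").orElse("(filtered)"));
  }
}
```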

Configuration

The configuration directory org.eclipse.smila.importing.crawler.web contains the configuration file webcrawler.properties.

The configuration file can contain the following properties:

- proxyHost (default: none)

- proxyPort (default: 80)

- socketTimeout (default: none, i.e. no socket timeout)

- userAgent (default: "SMILA (http://wiki.eclipse.org/SMILA/UserAgent; smila-dev@eclipse.org)").

- allowAllOn40xForRobots (default: false): whether a website is crawled even if its robots.txt could not be accessed.

The properties proxyHost and proxyPort define a proxy for the web crawler (the DefaultFetcher class uses them to configure its HTTP client). The socketTimeout property defines the fetcher's socket timeout while retrieving data from the server; if it is omitted, the fetcher sets no timeout.

User-Agent and robots.txt

If you use SMILA for your own project, please change userAgent to your own name, URL and email address. Apart from telling the web server who you are, the value determines which settings are taken from the crawled sites' robots.txt files: the WebCrawler chooses the first set of "Disallow:" lines whose "User-agent:" value is a case-insensitive substring of this configuration property.

It is currently not possible to ignore the robots.txt by configuration or job parameters.

Furthermore, the web crawler uses only the basic robots.txt directives "User-agent:" and "Disallow:" as defined in the original standard (see http://www.robotstxt.org/orig.html). This means that it ignores "Allow:" lines and does not evaluate "Disallow:" values as regular expressions or any other kind of pattern. The crawler simply skips all links that start exactly (case-sensitive) with one of the "Disallow:" values. The only exception is an empty "Disallow:" value, which means that all links on the site may be crawled.
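The robots.txt rules described in this section (first matching "User-agent:" record, case-insensitive substring test against the configured userAgent, plain case-sensitive prefix matching of "Disallow:" values, an empty value allowing everything) could be sketched as follows. This is a hypothetical illustration: it implements only the rules as stated here, it compares "Disallow:" values against a link's path (an assumption), and it gives a "User-agent: *" record no special treatment, so it does not model how the actual crawler handles such records.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical sketch of the robots.txt rules described above; not SMILA's implementation. */
final class RobotsTxtSketch {

  /**
   * Returns the non-empty "Disallow:" values of the first record whose "User-agent:" value is a
   * case-insensitive substring of the configured userAgent, or an empty list if no record matches.
   */
  static List<String> disallowsFor(String robotsTxt, String userAgent) {
    String agent = userAgent.toLowerCase(Locale.ROOT);
    List<String> disallows = new ArrayList<>();
    boolean matched = false;      // current record applies to our user agent
    boolean inAgentLines = false; // previous directive was a "User-agent:" line
    for (String rawLine : robotsTxt.split("\\r?\\n")) {
      String line = rawLine.replaceFirst("#.*", "").trim(); // strip comments
      int colon = line.indexOf(':');
      if (colon < 0) {
        continue; // blank or malformed line
      }
      String field = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
      String value = line.substring(colon + 1).trim();
      if (field.equals("user-agent")) {
        if (!inAgentLines && matched) {
          break; // a new record starts after the first matching one: done
        }
        inAgentLines = true;
        if (!value.isEmpty() && agent.contains(value.toLowerCase(Locale.ROOT))) {
          matched = true;
        }
      } else if (field.equals("disallow")) {
        inAgentLines = false;
        if (matched && !value.isEmpty()) { // an empty value disallows nothing
          disallows.add(value);
        }
      }
      // All other directives ("Allow:", "Crawl-delay:", ...) are ignored.
    }
    return disallows;
  }

  /** Plain, case-sensitive prefix test; Disallow values are not treated as patterns. */
  static boolean isAllowed(String path, List<String> disallows) {
    for (String prefix : disallows) {
      if (path.startsWith(prefix)) {
        return false;
      }
    }
    return true;
  }

  public static void main(String[] args) {
    String robots = "User-agent: googlebot\nDisallow: /\n\nUser-agent: smila\nDisallow: /private\n";
    List<String> rules = disallowsFor(robots, "SMILA (http://wiki.eclipse.org/SMILA/UserAgent; smila-dev@eclipse.org)");
    System.out.println(rules + " " + isAllowed("/private/page.html", rules) + " " + isAllowed("/public/page.html", rules));
  }
}
```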

The crawler does not observe "Crawl-delay:" directives in robots.txt either, so make sure to configure appropriate waitBetweenRequests (see above), taskControl/delay and scale-up settings for the web crawler worker yourself.
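As a rough calculation, and assuming the pause applies within each crawler task, waitBetweenRequests = 100 limits one task to at most 10 requests per second; with scale-up, several tasks may run in parallel, so the combined rate against a site can be a multiple of that. The following hypothetical Java helper shows the basic idea of a minimum pause between consecutive requests; it is not taken from SMILA.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical helper enforcing a minimum pause between consecutive requests (not SMILA code). */
final class RequestThrottleSketch {
  private final long minIntervalNanos;
  private long lastRequestNanos;
  private boolean firstRequest = true;

  RequestThrottleSketch(long waitBetweenRequestsMillis) {
    this.minIntervalNanos = TimeUnit.MILLISECONDS.toNanos(waitBetweenRequestsMillis);
  }

  /** Blocks until at least the configured pause has passed since the previous call returned. */
  synchronized void awaitTurn() {
    if (!firstRequest) {
      long remaining = minIntervalNanos - (System.nanoTime() - lastRequestNanos);
      if (remaining > 0) {
        try {
          TimeUnit.NANOSECONDS.sleep(remaining);
        } catch (InterruptedException e) {
          Thread.currentThread().interrupt(); // keep the interrupt status for the caller
        }
      }
    }
    firstRequest = false;
    lastRequestNanos = System.nanoTime();
  }

  public static void main(String[] args) {
    RequestThrottleSketch throttle = new RequestThrottleSketch(100); // e.g. waitBetweenRequests = 100
    long start = System.nanoTime();
    for (int i = 0; i < 5; i++) {
      throttle.awaitTurn();
      // ... perform one HTTP request here ...
    }
    System.out.printf("5 requests took %d ms%n", TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start));
  }
}
```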

The robots.txt is fetched from the web server only once per site and job run, so changes to a robots.txt do not take effect until the crawl job is restarted.

The property allowAllOn40xForRobots defines whether a website is crawled even if its robots.txt could not be accessed. For example, if a site has no robots.txt at all (so everything is allowed) but the location where the robots.txt would be read from is protected (so it cannot be read), setting allowAllOn40xForRobots=true allows the crawler to crawl the public parts of the site.

Configuring a proxy

You can define the proxy the web crawler should use in the configuration file (see above). For example, to make the web crawler use a proxy at proxy-host:3128, use the following configuration:

proxyHost=proxy-host
proxyPort=3128

Alternatively you can also use the JRE system properties http.proxyHost and http.proxyPort (see http://docs.oracle.com/javase/7/docs/technotes/guides/net/proxies.html for more information on proxy system properties).
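To illustrate what the two properties mean, the following sketch builds a `java.net.Proxy` from webcrawler.properties-style values, using the documented default port 80. It is hypothetical: SMILA's DefaultFetcher configures its own HTTP client and does not necessarily use `java.net.Proxy`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.util.Properties;

/** Hypothetical sketch: turn proxyHost/proxyPort settings into a java.net.Proxy (not SMILA code). */
final class ProxyConfigSketch {

  static Proxy proxyFrom(Properties config) {
    String host = config.getProperty("proxyHost");
    if (host == null || host.trim().isEmpty()) {
      return Proxy.NO_PROXY; // proxyHost default: none
    }
    int port = Integer.parseInt(config.getProperty("proxyPort", "80").trim()); // proxyPort default: 80
    return new Proxy(Proxy.Type.HTTP, InetSocketAddress.createUnresolved(host.trim(), port));
  }

  public static void main(String[] args) throws IOException {
    Properties config = new Properties();
    config.load(new StringReader("proxyHost=proxy-host\nproxyPort=3128\n")); // as in the example above
    System.out.println(proxyFrom(config));
    // The JRE-wide alternative uses system properties instead, e.g. on the command line:
    //   java -Dhttp.proxyHost=proxy-host -Dhttp.proxyPort=3128 ...
  }
}
```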

Internal structure

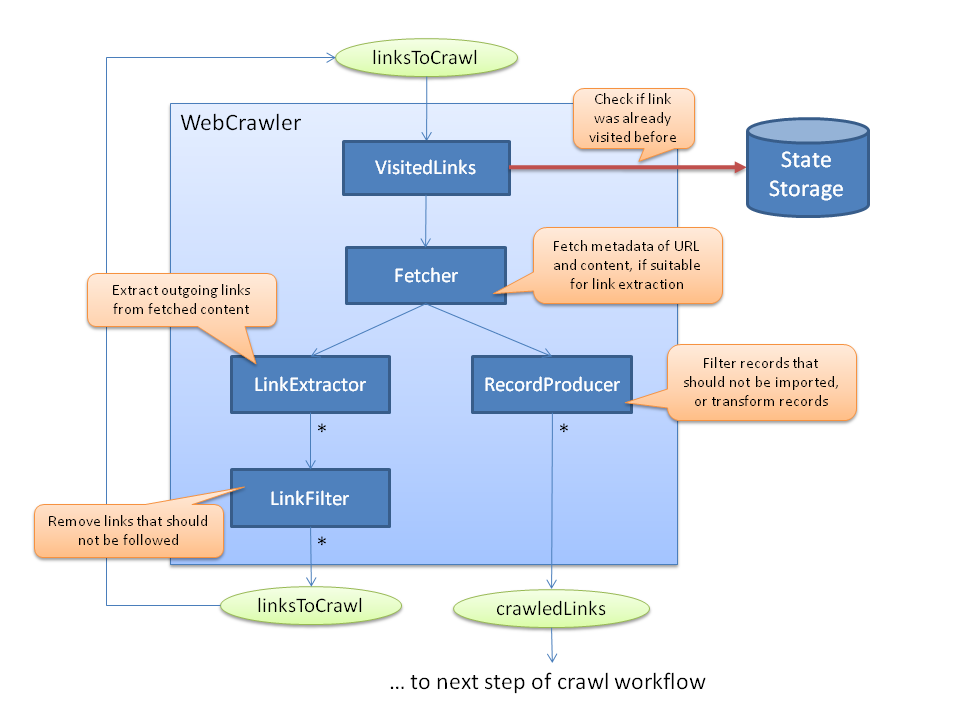

To make it easier to extend and improve the web crawler it is divided internally into components. Each of them is a single OSGi service that handles one part of the crawl functionality and can be exchanged individually to improve a single part of the functionality. The architecture looks like this:

The WebCrawler worker is started with an input bulk that contains records with URLs to crawl. The exception is the start of the crawl process: there the worker gets a task without an input bulk and generates an input record from its configured startUrl parameter. The components are then executed as follows:

- First, the VisitedLinksService is asked whether this link has already been crawled in this crawl job run. If so, the record is dropped and no output is produced. Otherwise, the link is marked as visited in the VisitedLinksService and processing continues.

- The Fetcher is called to get the metadata (e.g. the mime type). If the mime type of the resource is suitable for link extraction, the Fetcher also gets the content. Otherwise the content will only be fetched in the WebFetcher worker later in the crawl workflow to save IO load.

- If the content of the resource was fetched, the LinkExtractor is called to extract outgoing links (e.g. look for <A> tags). It can produce multiple link records containing one absolute outgoing URL each.

- If outgoing links were found, the current crawl depth is checked: if a maximum crawl depth is configured for this job and it is exceeded, the links are discarded. The current crawl depth is stored in each link record (in the attribute crawlDepth).

- The LinkFilter is called next to remove links that should not be followed (e.g. because they are on a different site) and to remove duplicates.

- Finally, the RecordProducer is called to decide how the processed record is written to the recordBulks output bulk. The producer can modify the records or split them into multiple records, if the use case requires it.
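The order of the component calls for a single link can be summarized in a simplified Java sketch. The nested interfaces are hypothetical stand-ins for the VisitedLinksService, Fetcher, LinkExtractor and LinkFilter services (not their real signatures), the RecordProducer is reduced to collecting URLs, and the in-memory queue replaces the linksToCrawl bulks and tasks of a real job. The crawlDepth handling follows the description in the implementation details: the start URL gets maxCrawlDepth, and a link with crawlDepth 0 is crawled without extracting its links.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

/** Hypothetical, single-threaded sketch of the component order; not SMILA's service interfaces. */
final class CrawlPipelineSketch {

  static final class Link {
    final String url;
    final int crawlDepth; // remaining depth, decreased with each crawl step

    Link(String url, int crawlDepth) {
      this.url = url;
      this.crawlDepth = crawlDepth;
    }
  }

  interface VisitedLinks { boolean markVisited(String url); } // false if already visited
  interface Fetcher { String fetchContentOrNull(String url); } // null: content not fetched here
  interface LinkExtractor { List<String> extract(String content); }
  interface LinkFilter { List<String> filter(List<String> urls); }

  /** Processes one link record and returns the outgoing link records. */
  static List<Link> process(Link link, VisitedLinks visited, Fetcher fetcher,
      LinkExtractor extractor, LinkFilter filter, List<String> crawledRecords) {
    if (!visited.markVisited(link.url)) {
      return new ArrayList<>(); // already crawled in this job run: dropped, no output
    }
    String content = fetcher.fetchContentOrNull(link.url);
    List<Link> outgoing = new ArrayList<>();
    if (content != null && link.crawlDepth > 0) { // crawlDepth 0: no link extraction
      for (String url : filter.filter(extractor.extract(content))) {
        outgoing.add(new Link(url, link.crawlDepth - 1));
      }
    }
    crawledRecords.add(link.url); // stand-in for the RecordProducer
    return outgoing;
  }

  /** Crawls an in-memory "site" whose page contents are space-separated lists of URLs. */
  static List<String> crawl(Map<String, String> site, String startUrl, int maxCrawlDepth) {
    Set<String> visited = new HashSet<>();
    List<String> crawled = new ArrayList<>();
    Deque<Link> queue = new ArrayDeque<>();
    queue.add(new Link(startUrl, maxCrawlDepth));
    while (!queue.isEmpty()) {
      queue.addAll(process(queue.poll(), visited::add, site::get,
          content -> Arrays.stream(content.split("\\s+")).filter(s -> !s.isEmpty())
              .collect(Collectors.toList()),
          urls -> urls, // a real LinkFilter would apply stayOn, urlPatterns and robots.txt
          crawled));
    }
    return crawled;
  }

  public static void main(String[] args) {
    Map<String, String> site = Map.of("http://h/a", "http://h/b http://h/c", "http://h/b", "http://h/a");
    System.out.println(crawl(site, "http://h/a", 1));
  }
}
```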

Both the fetcher and the link filter check the input links against the site's robots.txt file. To avoid reading the same site's robots.txt repeatedly, the disallowed links are stored in the job-run data of the crawl job. Only the fetcher actually reads a robots.txt, if it has not been read yet; the link filter only uses settings that are already cached.

Scaling

Outgoing links are split into multiple bulks to improve scaling: the outgoing links from the initial task that crawls the startUrl are each written to a bulk of their own, while outgoing links from later tasks are grouped into bulks according to the linksPerBulk parameter. The outgoing crawled records are divided into bulks of at most 100 records.
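The bulk sizes come down to simple arithmetic: if the start page has 25 outgoing links, they end up in 25 bulks; 25 outgoing links from a later task with linksPerBulk = 10 give 3 bulks (10, 10 and 5 links); 250 crawled records give 3 bulks (100, 100 and 50). A hypothetical Java sketch of this splitting, not taken from SMILA:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical sketch of the bulk sizes described above; not SMILA code. */
final class BulkSplitSketch {

  static final int MAX_CRAWLED_RECORDS_PER_BULK = 100;

  /** Splits items into consecutive bulks of at most bulkSize elements. */
  static <T> List<List<T>> split(List<T> items, int bulkSize) {
    if (bulkSize < 1) {
      throw new IllegalArgumentException("bulkSize must be at least 1");
    }
    List<List<T>> bulks = new ArrayList<>();
    for (int from = 0; from < items.size(); from += bulkSize) {
      bulks.add(new ArrayList<>(items.subList(from, Math.min(from + bulkSize, items.size()))));
    }
    return bulks;
  }

  /** The start task writes one bulk per outgoing link; later tasks use linksPerBulk. */
  static <T> List<List<T>> splitOutgoingLinks(List<T> links, boolean isStartTask, int linksPerBulk) {
    return split(links, isStartTask ? 1 : linksPerBulk);
  }

  static <T> List<List<T>> splitCrawledRecords(List<T> records) {
    return split(records, MAX_CRAWLED_RECORDS_PER_BULK);
  }

  public static void main(String[] args) {
    List<String> links = Collections.nCopies(25, "http://example.com/page");
    System.out.println(splitOutgoingLinks(links, true, 10).size());  // 25
    System.out.println(splitOutgoingLinks(links, false, 10).size()); // 3
    System.out.println(splitCrawledRecords(Collections.nCopies(250, "record")).size()); // 3
  }
}
```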

Implementation details

- ObjectStoreVisitedLinksService (implements VisitedLinksService): Uses the ObjectStoreService to store which links have been visited, similar to the ObjectStoreDeltaService. It uses a configuration file with the same properties in the same configuration directory, but named visitedlinksstore.properties.

- DefaultFetcher: Uses a GET request to read the URL. Authentication is currently not supported. If the resource has mimetype text/html, the content is written to the attachment httpContent. The fetcher sets the following attributes:

- httpSize: value from HTTP header Content-Length (-1, if not set), as a Long value.

- httpContenttype: value from HTTP header Content-Type, if set.

- httpMimetype: mimetype part of HTTP header Content-Type, if set.

- httpCharset: charset part of HTTP header Content-Type, if set.

- httpLastModified: value from HTTP header Last-Modified, if set, as a DateTime value.

- _isCompound: set to true for resources that are identified as extractable compound objects by the running CompoundExtractor service.

- DefaultRecordProducer: Sets the record source and calculates the _deltaHash value for the DeltaChecker worker (the first applicable rule wins):

- if content is attached, calculate a digest.

- if httpLastModified attribute is set, use it as the hash.

- if the httpSize attribute is set, concatenate it with the value of the httpMimetype attribute and use the result as hash.

- if nothing works, create a UUID to force updating.

- DefaultLinkExtractor (implements LinkExtractor): Simple link extraction from HTML <A href="..."> tags using the TagSoup HTML parser.

- DefaultLinkFilter: Links are normalized (e.g. fragment parts from URLs ("#...") are removed) and filtered against the specified filter configuration.

- The attribute crawlDepth is used to track the crawl depth of each link to support checking the crawl depth with the maxCrawlDepth filter: It's initialized with the maxCrawlDepth value for the start URL and decreased with each crawl step. If it reaches 0 in a linksToCrawl record, no links are extracted from this resource, but only a crawledRecord for the resource itself is produced.
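The _deltaHash rules of the DefaultRecordProducer and the splitting of the Content-Type header into httpMimetype and httpCharset can be sketched as follows. The digest algorithm is not documented, so SHA-256 is an assumption, as are the concatenation order for the size/mimetype rule and the simple Content-Type parsing; the class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.UUID;

/** Hypothetical sketch of the _deltaHash rules and the Content-Type split; not SMILA code. */
final class RecordProducerSketch {

  /** The first applicable rule wins, as listed for the DefaultRecordProducer. */
  static String deltaHash(byte[] content, String httpLastModified, Long httpSize, String httpMimetype) {
    if (content != null) {
      return sha256Hex(content); // assumption: SHA-256, the digest algorithm is not documented
    }
    if (httpLastModified != null) {
      return httpLastModified;
    }
    if (httpSize != null) {
      return httpSize + (httpMimetype == null ? "" : httpMimetype); // assumption: size first
    }
    return UUID.randomUUID().toString(); // differs on every run, so the record is always updated
  }

  static String sha256Hex(byte[] data) {
    try {
      StringBuilder hex = new StringBuilder();
      for (byte b : MessageDigest.getInstance("SHA-256").digest(data)) {
        hex.append(String.format("%02x", b));
      }
      return hex.toString();
    } catch (NoSuchAlgorithmException e) {
      throw new IllegalStateException("SHA-256 is not available", e);
    }
  }

  /** Splits e.g. "text/html; charset=UTF-8" into {mimetype, charset}; charset may be null. */
  static String[] splitContentType(String contentType) {
    String[] parts = contentType.split(";");
    String charset = null;
    for (int i = 1; i < parts.length; i++) {
      String param = parts[i].trim();
      if (param.regionMatches(true, 0, "charset=", 0, 8)) {
        charset = param.substring(8).replace("\"", "").trim();
      }
    }
    return new String[] {parts[0].trim(), charset};
  }

  public static void main(String[] args) {
    System.out.println(deltaHash("<html/>".getBytes(StandardCharsets.UTF_8), null, null, null));
    System.out.println(String.join(" | ", splitContentType("text/html; charset=UTF-8")));
  }
}
```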

Web Fetcher Worker

- Worker name: webFetcher

- Parameters:

- waitBetweenRequests: (opt., see Web Crawler)

- linkErrorHandling: (opt., default "drop") specifies how to handle IO errors (e.g. network problems that might resolve after a while) when trying to access the URL in a link record to crawl. It's similar to the Web Crawler parameter with the same name. Possible values are:

- "drop": The record will be ignored and not added to the "crawledRecords" output, but other links in the same bulk can be processed successfully.

- "retry": the current task is finished with a recoverable error so that it might be retried later (depending on job/task manager settings). However, if the task cannot be finished successfully within the configured number of retries, it will finally fail and all other links contained in the same bulk will not be crawled either.

- "ignore": The error will be ignored, so the record (without the fetched content) is added to the output. A warning will be written to the log.

- filters: (opt., see Web Crawler)

- followRedirects: (opt., see Web Crawler)

- maxRedirects: (opt., see Web Crawler)

- urlPatterns: (opt., see Web Crawler) applied to resulting URL of a redirect

- include: (opt., see Web Crawler)

- exclude: (opt., see Web Crawler)

- mapping (req., see Web Crawler)

- httpUrl (req.) to read the attribute that contains the URL where to fetch the content

- httpContent (req.) attachment name where the file content is written to

- httpMimetype (opt., see Web Crawler)

- httpCharset (opt., see Web Crawler)

- httpContenttype (opt., see Web Crawler)

- httpLastModified (opt., see Web Crawler)

- httpSize (opt., see Web Crawler)


- Input slots:

- linksToFetch: Records describing crawled resources, with or without the content of the resource.

- Output slots:

- fetchedLinks: The incoming records with the content of the resource attached.

If the attachment httpContent is not set yet, the fetcher tries to get the content of the web resource identified by the attribute httpUrl. Like the DefaultFetcher above, it does not support authentication.
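The linkErrorHandling modes of the webFetcher can be sketched like this. The records are simplified to maps whose keys stand in for the attribute and attachment names configured via mapping, and `RecoverableTaskException` and `Downloader` are hypothetical stand-ins for SMILA's task error handling and HTTP access. As for the webCrawler, the sketch logs a warning when a record is dropped; the webFetcher description above does not state this explicitly.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.logging.Logger;

/** Hypothetical sketch of the webFetcher's linkErrorHandling modes; not SMILA code. */
final class FetchErrorHandlingSketch {

  enum LinkErrorHandling { DROP, RETRY, IGNORE }

  /** Stand-in for a recoverable task error that the task manager may retry. */
  static final class RecoverableTaskException extends RuntimeException {
    RecoverableTaskException(String message, Throwable cause) {
      super(message, cause);
    }
  }

  /** Stand-in for the HTTP access. */
  interface Downloader {
    byte[] get(String url) throws IOException;
  }

  private static final Logger LOG = Logger.getLogger(FetchErrorHandlingSketch.class.getName());

  static List<Map<String, Object>> fetchAll(List<Map<String, Object>> records,
      Downloader downloader, LinkErrorHandling mode) {
    List<Map<String, Object>> output = new ArrayList<>();
    for (Map<String, Object> input : records) {
      Map<String, Object> result = new HashMap<>(input);
      if (!result.containsKey("httpContent")) { // only fetch if no content is attached yet
        String url = (String) result.get("httpUrl");
        try {
          result.put("httpContent", downloader.get(url));
        } catch (IOException e) {
          switch (mode) {
            case DROP: // skip this record, continue with the others
              LOG.warning("Dropping " + url + ": " + e.getMessage());
              continue;
            case RETRY: // fail the whole task so that it may be retried
              throw new RecoverableTaskException("Fetching " + url + " failed", e);
            case IGNORE: // keep the record without content
              LOG.warning("Ignoring error for " + url + ": " + e.getMessage());
              break;
            default:
              throw new IllegalStateException("Unknown mode " + mode);
          }
        }
      }
      output.add(result);
    }
    return output;
  }

  public static void main(String[] args) {
    Downloader failing = url -> { throw new IOException("connection reset"); };
    List<Map<String, Object>> input = List.of(Map.of("httpUrl", "http://example.com/a.pdf"));
    System.out.println(fetchAll(input, failing, LinkErrorHandling.IGNORE));
  }
}
```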

Web Extractor Worker

- Worker name: webExtractor

- Parameters:

- filters: (opt., see Web Crawler)

- followRedirects: (opt., see Web Crawler)

- maxRedirects: (opt., see Web Crawler)

- urlPatterns: (opt., see Web Crawler)

- include: (opt., see Web Crawler)

- exclude: (opt., see Web Crawler)

- mapping (req., see Web Crawler)

- httpUrl (req., see Web Crawler) URLs of compounds have the compound link as prefix, e.g. http://example.com/compound.zip/compound-element.txt

- httpMimetype (req., see Web Crawler)

- httpCharset (opt., see Web Crawler)

- httpContenttype (opt., see Web Crawler)

- httpLastModified (opt., see Web Crawler)

- httpSize (opt., see Web Crawler)

- httpContent (opt., see Web Crawler)


- Input slots:

- compounds

- Output slots:

- files

- Dependency: CompoundExtractor service

For each input record, an input stream to the described web resource is created and fed into the CompoundExtractor service. The produced records are converted to look like records produced by the file crawler. The following additional internal attributes are set:

- _deltaHash: computed as in the WebCrawler worker

- _compoundRecordId: record ID of top-level compound this element was extracted from

- _isCompound: set to true for elements that are compounds themselves.

- _compoundPath: sequence of httpUrl attribute values of the compound objects needed to navigate to the compound element.

The crawler attributes httpContenttype, httpMimetype and httpCharset are currently not set by the WebExtractor worker.

If the element is not a compound itself, its content is added as attachment httpContent.
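The naming scheme for compound elements (element URL = compound URL plus the element's name, _compoundPath = the httpUrl values of the enclosing compounds) can be illustrated with `java.util.zip`. This swaps in plain ZIP handling for the CompoundExtractor service and is only a sketch: detecting nested compounds by the .zip extension and the exact contents of the path are assumptions.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

/** Hypothetical sketch of compound element naming, using java.util.zip instead of the CompoundExtractor. */
final class CompoundUrlSketch {

  static final class Element {
    final String httpUrl;
    final boolean isCompound;
    final List<String> compoundPath; // httpUrl values of the enclosing compounds, outermost first

    Element(String httpUrl, boolean isCompound, List<String> compoundPath) {
      this.httpUrl = httpUrl;
      this.isCompound = isCompound;
      this.compoundPath = compoundPath;
    }
  }

  static List<Element> extract(InputStream in, String compoundUrl) {
    List<Element> elements = new ArrayList<>();
    try {
      extract(in, compoundUrl, new ArrayList<>(), elements);
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return elements;
  }

  private static void extract(InputStream in, String compoundUrl, List<String> parents,
      List<Element> elements) throws IOException {
    ZipInputStream zip = new ZipInputStream(in); // not closed: that would also close `in`
    List<String> path = new ArrayList<>(parents);
    path.add(compoundUrl);
    ZipEntry entry;
    while ((entry = zip.getNextEntry()) != null) {
      if (entry.isDirectory()) {
        continue;
      }
      String url = compoundUrl + "/" + entry.getName(); // compound URL as prefix
      boolean nested = entry.getName().endsWith(".zip"); // assumption: detected by file name
      elements.add(new Element(url, nested, path));
      if (nested) {
        extract(zip, url, path, elements); // read the nested archive from the current entry
      }
    }
  }

  /** Builds an in-memory ZIP archive, e.g. for trying out the sketch. */
  static byte[] zipOf(String[] names, byte[][] contents) {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
      for (int i = 0; i < names.length; i++) {
        zip.putNextEntry(new ZipEntry(names[i]));
        zip.write(contents[i]);
        zip.closeEntry();
      }
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return bytes.toByteArray();
  }

  public static void main(String[] args) {
    byte[] inner = zipOf(new String[] {"doc.txt"}, new byte[][] {{1, 2}});
    byte[] outer = zipOf(new String[] {"a.txt", "inner.zip"}, new byte[][] {{3}, inner});
    for (Element e : extract(new ByteArrayInputStream(outer), "http://example.com/compound.zip")) {
      System.out.println(e.httpUrl + " compound=" + e.isCompound + " path=" + e.compoundPath);
    }
  }
}
```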

Sample web crawl job

Job definition for crawling from start URL "http://wiki.eclipse.org/SMILA", pushing the imported records to job "indexUpdateJob". An include pattern is defined to make sure that we only crawl URLs from "below" our start URL.

{
  "name":"crawlWebJob",
  "workflow":"webCrawling",
  "parameters":{
    "tempStore":"temp",
    "dataSource":"web",
    "startUrl":"http://wiki.eclipse.org/SMILA",
    "jobToPushTo":"indexUpdateJob",
    "waitBetweenRequests": 100,
    "mapping":{
      "httpContent":"Content",
      "httpUrl":"Path"
    },
    "filters":{
      "urlPatterns":{
        "include":["http://wiki\\.eclipse\\.org/SMILA/.*"]
      }
    }
  }
}